Unraid Technical Summary & Documentation

A Builder’s technical guide to Unraid as a self-hosted AI + homelab platform.

1: What Unraid is - and when to choose it

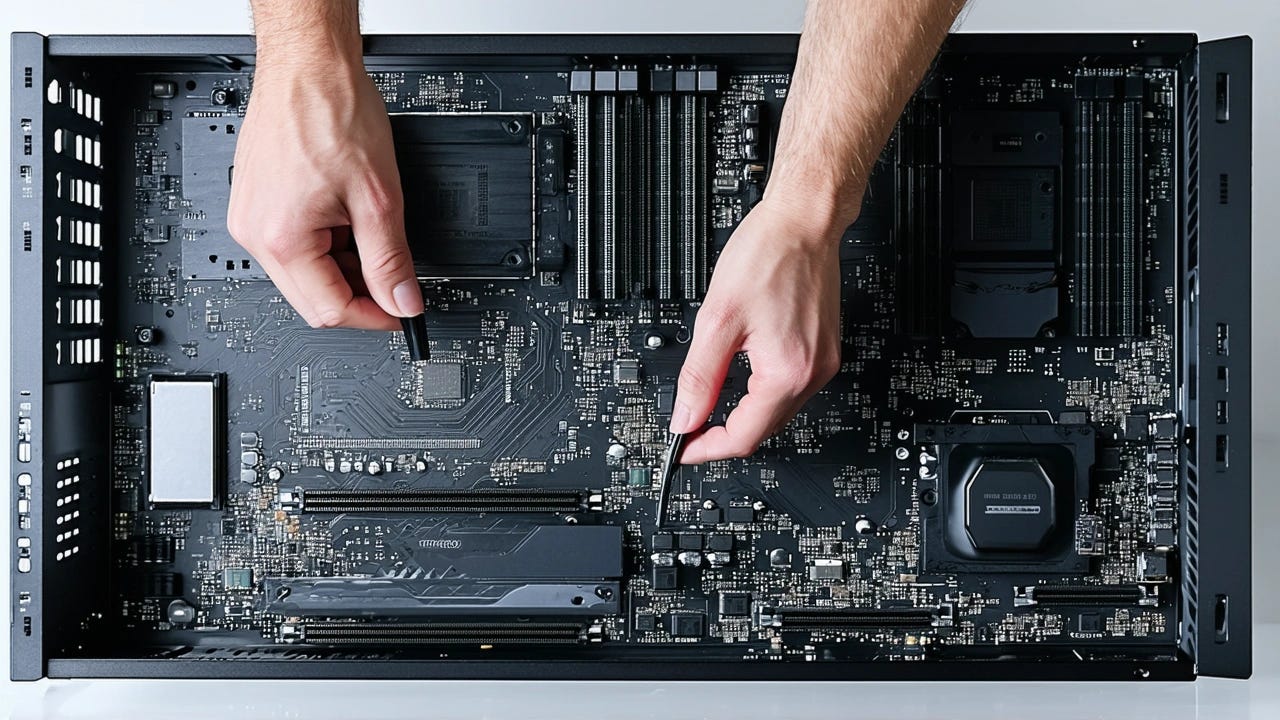

Unraid is a storage‑first Linux OS with an opinionated web UI. The pillars:

The Array - a set of individually formatted disks plus one or two parity disks for fault tolerance. Files are not striped; each file lives in full on one disk. You get easy incremental expansion, heterogeneous drive sizes, and graceful partial‑degradation behavior.
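The sketch below is a toy model of XOR (even) parity, which is how the first parity disk is commonly described. It shows why one parity disk can rebuild any single missing disk. Byte strings stand in for disks. A shorter disk is treated as zero‑filled past its end, which is also why parity must be at least as large as the largest data disk. The second parity disk uses a different calculation and is not modeled here.

```python
import operator
from functools import reduce

def xor_parity(disks: list[bytes]) -> bytes:
    """Byte-wise XOR across disks; shorter disks count as zero-filled past their end."""
    width = max(len(d) for d in disks)
    padded = [d.ljust(width, b"\x00") for d in disks]
    return bytes(reduce(operator.xor, column) for column in zip(*padded))

def rebuild(surviving: list[bytes], parity: bytes, size: int) -> bytes:
    """Recover the one missing disk: XOR parity with every surviving disk, trim to its size."""
    return xor_parity(surviving + [parity])[:size]

# Three toy "disks" of mismatched sizes, as the array allows.
disk1, disk2, disk3 = b"\x0f\xf0\xaa\x01", b"\x01\x02\x03", b"\xff\x00\x55\x10"
parity = xor_parity([disk1, disk2, disk3])
print(rebuild([disk1, disk3], parity, len(disk2)) == disk2)
```

Because each file lives whole on one disk, losing two disks with single parity still leaves the files on every other disk readable.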

Pools - SSD or HDD pools outside the array, typically for cache, databases, or VMs. Since Unraid 6.12, ZFS pools are first‑class citizens. Multiple pools are common: e.g., nvme‑zfs for appdata, ssd‑zfs for VMs.

Shares - logical namespaces that aggregate top‑level folders across the array and pools. Share settings steer where data lands and how the mover reconciles between pools and the array over time.

Docker + VMs - a container runtime with Unraid templates, plus a KVM‑based VM manager with PCIe passthrough.

Choose Unraid when you want simple scaling with mismatched drives, a GUI‑first admin experience, and a strong app ecosystem without committing to striped RAID.

2: Core storage model

2.1 Array + parity

Up to two parity disks. Parity must be at least as large as your largest data disk.

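Replacing a failed disk is straightforward. Unraid emulates the missing disk from parity while the array stays online, then rebuilds onto the replacement. A replacement data disk larger than current parity needs the parity‑swap procedure, or a parity upgrade first.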
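The sizing rules above can be written as a quick pre‑flight check. `validate_array` is a hypothetical helper, not an Unraid tool. It takes disk sizes in TB and returns any rule violations.

```python
def validate_array(parity_tb: list[float], data_tb: list[float]) -> list[str]:
    """Return problems with a proposed array layout (an empty list means OK)."""
    problems = []
    if len(parity_tb) > 2:
        problems.append("at most two parity disks are supported")
    largest_data = max(data_tb, default=0)
    # Every parity disk must cover the largest data disk.
    for i, size in enumerate(parity_tb, start=1):
        if size < largest_data:
            problems.append(
                f"parity {i} ({size} TB) is smaller than the largest data disk ({largest_data} TB)"
            )
    return problems

print(validate_array([12], [8, 10, 12]))
print(validate_array([10], [12]))
```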

2.2 Pools: cache, metadata, and performance

Use one or more pools for performance‑sensitive data: container appdata, VM disks, databases, vector stores.

ZFS pools are ideal for integrity and snapshots; btrfs pools remain useful for flexible multi‑device configurations.

2.3 Shares and the mover

Shares act as policy. The key levers:

Primary storage - where new writes go.

Secondary storage - where the mover will relocate files when it runs.

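Use cache/pool behavior - the pre‑6.12 name for this policy. Common values are “Yes” (write to the pool, then migrate to the array) and “Prefer” (keep on the pool, pulling files from the array back to the pool). Newer releases, including Unraid 7, express the same choice as primary/secondary storage plus an explicit mover direction.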

Split level, allocation, include/exclude - how files spread across array disks.
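The sketch below is a simplified model of two of Unraid's allocation methods, fill‑up and most‑free, combined with include/exclude lists and a minimum‑free threshold. It assumes disks are listed in slot order. It ignores split level and the high‑water method. The `Disk` type and `pick_disk` function are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Disk:
    name: str        # e.g. "disk1"; disks are assumed to be listed in slot order
    free_gb: float

def pick_disk(disks: list[Disk], method: str, min_free_gb: float,
              include: Optional[set[str]] = None, exclude: frozenset = frozenset()) -> Optional[str]:
    """Return the disk a new file would land on, or None if no disk qualifies."""
    eligible = [
        d for d in disks
        if (include is None or d.name in include)
        and d.name not in exclude
        and d.free_gb > min_free_gb
    ]
    if not eligible:
        return None
    if method == "fill-up":
        return eligible[0].name                              # lowest slot with room
    if method == "most-free":
        return max(eligible, key=lambda d: d.free_gb).name   # emptiest disk
    raise ValueError(f"unsupported method: {method}")

disks = [Disk("disk1", 50), Disk("disk2", 900), Disk("disk3", 400)]
print(pick_disk(disks, "fill-up", 100))
```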

Default shares created by Unraid: appdata, domains, system, and isos. Keep appdata and system (which holds docker.img and libvirt.img) on a fast pool. That keeps container and VM I/O off the parity‑protected array and keeps databases snappy. VM images typically live in domains on a pool.

3: Docker on Unraid

3.1 Docker templates vs Compose

Templates are single‑service XML definitions curated by Community Applications - great for one‑offs and quick starts.

Compose shines for multi‑container stacks. Install the Docker Compose Manager plugin to use `docker compose` directly. Many upstream app docs provide ready‑made Compose files.

3.2 Where data lives

The Docker vDisk (docker.img) should hold only images and container layers. Bind‑mount persistent paths into `/mnt/user/appdata/` or another share to avoid image‑full issues.
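One way to spot image‑full risks is to compare where an app writes against its path mappings. Any write outside a mapped container path lands inside docker.img. The sketch assumes you already know the app's write paths, for example from its docs. `unmapped_writes` and the example paths are hypothetical.

```python
from pathlib import PurePosixPath

def unmapped_writes(write_paths: list[str], mappings: dict[str, str]) -> list[str]:
    """Return container paths no bind mount covers (writes there end up in docker.img).

    mappings: container path -> host path, e.g. {"/config": "/mnt/user/appdata/myapp"}.
    """
    mounted = [PurePosixPath(c) for c in mappings]
    leaks = []
    for raw in write_paths:
        path = PurePosixPath(raw)
        # Covered if the path is a mount point or lives underneath one.
        covered = any(path == m or m in path.parents for m in mounted)
        if not covered:
            leaks.append(raw)
    return leaks

mappings = {"/config": "/mnt/user/appdata/myapp"}   # hypothetical template mapping
print(unmapped_writes(["/config/logs/app.log", "/transcode/tmp"], mappings))
```

Anything this returns is a candidate for a new path mapping under appdata or another share.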

3.3 Networks: ipvlan vs macvlan

For containers that need their own IP, Unraid supports custom networks on `br0`. Use ipvlan unless you specifically need macvlan behavior; this avoids the host‑to‑container call traces and crashes seen with macvlan on some NICs. “Host access to custom networks” can re‑enable host reachability, but understand the trade‑offs.
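A static IP on a custom network should sit inside the subnet but outside the router's DHCP pool. It also must not collide with the network, broadcast, or gateway addresses. Here is a minimal check using Python's standard `ipaddress` module, assuming a hypothetical 192.168.1.0/24 LAN.

```python
import ipaddress

def usable_static_ip(ip: str, subnet: str, dhcp_start: str, dhcp_end: str, gateway: str) -> bool:
    """True if ip is inside subnet, outside the DHCP pool, and not a reserved address."""
    addr = ipaddress.ip_address(ip)
    net = ipaddress.ip_network(subnet)
    if addr not in net:
        return False
    if addr in (net.network_address, net.broadcast_address, ipaddress.ip_address(gateway)):
        return False
    in_pool = ipaddress.ip_address(dhcp_start) <= addr <= ipaddress.ip_address(dhcp_end)
    return not in_pool

# Hypothetical LAN: router at .1, DHCP hands out .100-.199.
LAN = dict(subnet="192.168.1.0/24", dhcp_start="192.168.1.100",
           dhcp_end="192.168.1.199", gateway="192.168.1.1")
print(usable_static_ip("192.168.1.50", **LAN))
print(usable_static_ip("192.168.1.150", **LAN))
```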

3.4 GPUs and accelerators

Install the NVIDIA Driver plugin to expose GPUs to containers; for Intel iGPU telemetry, use Intel GPU TOP, and for dashboards, use GPU Statistics. Add the container runtime flags and device mappings required by each app.
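The sketch below assembles extra `docker run` arguments per GPU vendor to illustrate the flags and mappings involved. It assumes the NVIDIA Container Toolkit conventions commonly used with the NVIDIA Driver plugin: `--runtime=nvidia` plus the `NVIDIA_VISIBLE_DEVICES` and `NVIDIA_DRIVER_CAPABILITIES` variables. For Intel iGPUs it assumes the usual `/dev/dri` mapping. `gpu_run_args` is hypothetical, and individual apps may need more.

```python
import shlex

def gpu_run_args(vendor: str, nvidia_gpu: str = "all") -> list[str]:
    """Extra docker-run arguments for exposing a GPU (assumed conventions, see above)."""
    if vendor == "nvidia":
        return [
            "--runtime=nvidia",
            "-e", f"NVIDIA_VISIBLE_DEVICES={nvidia_gpu}",   # "all" or a specific GPU UUID
            "-e", "NVIDIA_DRIVER_CAPABILITIES=all",
        ]
    if vendor == "intel":
        return ["--device=/dev/dri"]                        # iGPU render/display nodes
    raise ValueError(f"unknown vendor: {vendor}")

print(shlex.join(gpu_run_args("nvidia")))
```

In an Unraid template, the runtime flag usually goes in Extra Parameters, and the variables become template variables.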

4: Virtual machines

KVM/QEMU based. Requires CPU virtualization (Intel VT‑x or AMD‑V) enabled in BIOS; PCIe passthrough additionally requires IOMMU (Intel VT‑d or AMD‑Vi).

Store VM disks on a fast pool. Snapshots and replication are simplest with ZFS.

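PCIe passthrough for GPUs and USB controllers is well‑worn territory. Check that each device you pass through sits in a clean IOMMU group. On consumer boards that lump devices together, the ACS override can split groups, at the cost of weaker isolation.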
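To check grouping before assigning a device, you can read the kernel's IOMMU groups from sysfs on any Linux host, Unraid included. This sketch assumes the standard `/sys/kernel/iommu_groups/<n>/devices/` layout. The helper names are hypothetical. The `root` parameter exists only so the code can be pointed at a test directory.

```python
from pathlib import Path

def iommu_groups(root: str = "/sys/kernel/iommu_groups") -> dict[int, list[str]]:
    """Map each IOMMU group number to the PCI addresses (e.g. 0000:01:00.0) it contains."""
    groups = {}
    for group_dir in Path(root).iterdir():
        devices = group_dir / "devices"
        if group_dir.name.isdigit() and devices.is_dir():
            groups[int(group_dir.name)] = sorted(d.name for d in devices.iterdir())
    return dict(sorted(groups.items()))

def passthrough_companions(groups: dict[int, list[str]], pci_addr: str) -> list[str]:
    """Other devices sharing pci_addr's group; they normally have to go to the VM together."""
    for members in groups.values():
        if pci_addr in members:
            return [m for m in members if m != pci_addr]
    raise KeyError(pci_addr)
```

A GPU that returns only its own HDMI audio function as a companion is the "clean group" case.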

5: Networking patterns that work

Simple: One flat LAN, containers on bridge or host. Reverse proxy publishes selected services.

Isolated apps: Custom ipvlan on VLANs for internet‑facing containers. Treat VLANs as blast‑radius boundaries.

Remote access: Prefer VPN over exposing the WebGUI. Unraid includes WireGuard; Tailscale is also popular and lightweight.

Reverse proxy options:

Nginx Proxy Manager - easy SSL and host routing with a GUI.

Caddy - automatic TLS with a clean config; the caddy‑docker‑proxy plugin can autogenerate routes from labels.
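With a plain Caddyfile (rather than label‑driven caddy‑docker‑proxy), the config is mostly one `reverse_proxy` block per hostname. Caddy obtains certificates for public names automatically. This sketch renders such a file from a hypothetical host‑to‑upstream mapping. The hostnames and ports are placeholders.

```python
def render_caddyfile(routes: dict[str, str]) -> str:
    """One site block per hostname, each proxying to an upstream host:port."""
    blocks = [
        f"{host} {{\n\treverse_proxy {upstream}\n}}\n"
        for host, upstream in sorted(routes.items())
    ]
    return "\n".join(blocks)

print(render_caddyfile({
    "jellyfin.example.com": "192.168.1.50:8096",    # hypothetical service
    "paperless.example.com": "192.168.1.51:8000",   # hypothetical service
}))
```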

6: Security posture

Set a strong root password day one. Do not expose the WebGUI directly to the internet. If remote management is needed, use WireGuard or Tailscale.

SSL for the WebGUI: provision a certificate and set Use SSL/TLS = Strict once DNS rebinding protection on your router is handled cleanly. Keep a local fallback path for emergencies.

Follow least‑privilege for containers. Avoid `--privileged` unless you’re certain. Prefer explicit device and capability grants.

Keep Unraid, plugins, and containers current. Review release notes before major upgrades.
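One way to apply least‑privilege in practice is to lint a template's Extra Parameters for broad grants. This is a hypothetical helper. The `RISKY` set is an example policy, not an Unraid default. The helper normalizes both `--flag value` and `--flag=value` spellings before checking.

```python
import shlex

# Example watch-list (an assumption); tune it to your own policy.
RISKY = {"--privileged", "--cap-add=ALL", "--cap-add=SYS_ADMIN", "--pid=host"}
VALUE_FLAGS = {"--cap-add", "--pid", "--device", "--network"}

def audit_extra_params(extra: str) -> list[str]:
    """Flag broad grants in a container's extra docker-run parameters."""
    tokens = shlex.split(extra)
    normalized, i = [], 0
    while i < len(tokens):
        tok = tokens[i]
        if tok in VALUE_FLAGS and i + 1 < len(tokens):
            normalized.append(f"{tok}={tokens[i + 1]}")   # "--cap-add ALL" -> "--cap-add=ALL"
            i += 2
        else:
            normalized.append(tok)
            i += 1
    return [t for t in normalized if t in RISKY]

print(audit_extra_params("--privileged --cap-add NET_ADMIN --device=/dev/dri"))
```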

7: Operations and lifecycle

7.1 Routine maintenance

Parity checks monthly or quarterly. Investigate SMART warnings early.

Back up the flash automatically via Unraid Connect, or download a zip after config changes.

Appdata backups nightly to another disk or pool, plus off‑site sync.

7.2 Replacing or upsizing disks

Simple replace: stop array, swap the disk, assign, start - Unraid rebuilds from parity.

Parity‑swap: when the replacement for a failed data disk is larger than current parity, copy parity to the new big disk, then reuse the old parity disk as the replacement data disk.
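The choice between a simple replace and a parity‑swap comes down to disk sizes. Below is a hypothetical decision helper (sizes in TB) that follows the rules stated above. It is not an Unraid tool.

```python
def replacement_plan(failed_tb: float, new_tb: float, parity_tb: list[float]) -> str:
    """Decide how to replace a failed data disk, given sizes in TB."""
    if not parity_tb:
        return "no parity: restore the data from backup"
    if new_tb < failed_tb:
        return "too small: the replacement must be at least as large as the failed disk"
    if new_tb <= min(parity_tb):
        return "simple replace: assign the new disk and rebuild from parity"
    return "parity swap: copy parity to the new disk, then rebuild onto the old parity disk"

print(replacement_plan(4, 12, [8]))
```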

7.3 Mover realities

If files don’t move, it’s usually a Use cache/pool mismatch. “Yes” pushes to the array; “Prefer” pulls to the pool. Disable Docker/VMs when moving `appdata` or `domains` to avoid open files.
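The Yes/Prefer semantics, and why open files block the mover, can be modeled in a few lines. This is a simplified simulation, not Unraid's mover script. It assumes the mover leaves in‑use files where they are. The `File` type is hypothetical.

```python
from dataclasses import dataclass

@dataclass
class File:
    path: str
    location: str          # "pool" or "array"
    in_use: bool = False

def mover_pass(files: list[File], setting: str) -> tuple[list[str], list[str]]:
    """Simulate one mover run for a share with the given Use cache/pool setting."""
    # "yes": pool -> array; "prefer": array -> pool; "only"/"no": mover leaves the share alone.
    source, target = {"yes": ("pool", "array"), "prefer": ("array", "pool")}.get(setting, (None, None))
    moved, skipped = [], []
    for f in files:
        if f.location != source:
            continue
        if f.in_use:
            skipped.append(f.path)      # e.g. a running container's database
        else:
            f.location = target
            moved.append(f.path)
    return moved, skipped

files = [File("a.db", "array", in_use=True), File("b.db", "array"), File("c.db", "pool")]
print(mover_pass(files, "prefer"))
```

With Docker running, `a.db` stays on the array, which is the "mover not moving" symptom.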

8: Backups you actually restore

Flash device: your license and config live here. Back it up routinely.

Appdata: use the Appdata Backup plugin, which stops and restarts containers around each run for consistent backups. Then sync those backups off‑box.

VMs and pools: ZFS snapshots + replication are worth it for critical workloads. For btrfs, consider periodic send/receive or a file‑level backup tool.
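On ZFS pools, one replication cycle is a new snapshot followed by an incremental `zfs send -i` piped into `zfs receive` on another box. This sketch only builds the command strings; it does not run them. The dataset names, snapshot prefix, and remote host are hypothetical.

```python
import shlex
from datetime import datetime
from typing import Optional

def replication_commands(dataset: str, previous: Optional[str], now: datetime,
                         remote: str, target: str) -> tuple[str, str]:
    """Return (snapshot command, send | receive pipeline); nothing is executed."""
    new_snap = f"{dataset}@auto-{now:%Y%m%d-%H%M}"
    snapshot = shlex.join(["zfs", "snapshot", new_snap])
    send = ["zfs", "send"]
    if previous:                       # incremental from the last replicated snapshot
        send += ["-i", previous]
    send.append(new_snap)
    receive = ["ssh", remote, "zfs", "receive", "-F", target]
    return snapshot, f"{shlex.join(send)} | {shlex.join(receive)}"

snap, pipe = replication_commands("nvme/appdata", "nvme/appdata@auto-20240101-0300",
                                  datetime(2024, 1, 2, 3, 0), "root@backupbox", "tank/appdata")
print(snap)
print(pipe)
```

The first run has no previous snapshot, so `previous=None` produces a full send. Later runs stay incremental.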

9: Troubleshooting patterns

docker.img keeps filling - stop writing logs or state into the image; bind‑mount proper paths into `appdata`.

Mover not moving - verify the share’s Use cache/pool setting and that services are stopped while moving sensitive shares.

macvlan call traces - switch custom networks to ipvlan; re‑evaluate host access needs.

Can’t reach WebGUI after SSL changes - use the direct IP with http on LAN, or SSH in and use the `use_ssl` fallback. Keep a console path ready.

10: Upgrading wisely

Skim the latest 7.1.x release notes before updating. Note hotfixes related to mover behavior in 7.1.2 and networking fixes in 7.1.4. If you skipped versions, scan the highlights back to 7.0.

After major updates, re‑check docker custom network type, GPU drivers, and plugin compatibility.

11: Opinionated starting points

One nvme ZFS pool for `appdata` and `domains`. Another SSD pool for scratch and transient data. Array of HDDs for bulk.

Nginx Proxy Manager or Caddy with DNS validation for public‑facing services; everything else stays behind VPN.

Appdata Backup nightly to array, then rclone to object storage.

Monthly parity check. SMART email alerts. UPS integrated.

12: Quick reference - common tasks

Put appdata back on pool: stop Docker, set `appdata` to Prefer, run Mover, then re‑enable Docker.

Give a container its own IP: Settings → Docker → enable a custom network on `br0` with ipvlan; in the template, choose that network and assign an IP inside the subnet but outside the DHCP range.

Expose GPU to containers: install NVIDIA Driver plugin, add device mappings and runtime flags; validate with `nvidia-smi` inside the container.

Swap parity to grow a data disk: use the parity‑swap flow; label drives carefully, keep a console open, and don’t multitask the server during parity copy.

Back up the flash: Unraid Connect flash backup or Main → Flash → Flash Backup.

13: Appendix - useful plugins and add‑ons

Community Applications - the app store. Install this first.

Fix Common Problems - audits your config and surfaces misconfigurations.

Appdata Backup - backs up appdata and can include VM metadata and flash; schedule it.

Unassigned Devices - mount external disks for cold backups or data ingress.

CA Auto Update Applications - scheduled container updates with notifications.

GPU Statistics - simple GPU telemetry in the dashboard.

Everything on Shared Sapience is free and open to all. However, it takes a tremendous amount of time and effort to keep these resources and guides up to date and useful for everyone.

If enough of my amazing readers could help with just a few dollars a month, I could dedicate myself full-time to helping Seekers, Builders, and Protectors collaborate better with AI and work toward a better future.

Even if you can’t support financially, becoming a free subscriber is a huge help in advancing the mission of Shared Sapience.
